Supercharging Medtech with purpose-built AI

In recent years, the Medtech sector has increasingly used machine learning to build smarter and more efficient medical products. We have been responsible for building the AI components in several end-to-end implementations, and we are proud to help make patients’ lives better through AI.

Since the dawn of Modulai, we have been passionate about helping others and using our core competency to make people’s lives better. Early on, we paired up with a small startup focused on diabetes and ended up leading the development of the AI components. The mission was to build a CE-approved medical product, used by clinicians, that gives advice on patients’ insulin dosage management. Together with researchers at Uppsala University, we developed several novel ML-based techniques for identifying various patterns in patients’ glucose measurement history. These techniques led to patents as well as published research.

Together with KI (Karolinska Institutet), we developed a deep learning-based system to identify intermittent atrial fibrillation (AF) in patients using a lightweight, easy-to-use device built by Zenicor Medical Systems. The collaboration led to a research publication and a new method to screen for AF that is both faster and cheaper than existing methods.

Furthermore, we’ve developed models for predicting risk of failure in the shipping of critical pharmaceuticals, using vast datasets of minute-level embedded temperature measurements and weather data.

The journey continues within Medtech. We’re currently engaged in various projects: helping people with rheumatoid arthritis (RA) understand their disease better, helping dermatologists find the right treatment for their clients, and helping develop a smart device that private individuals can use to screen their teeth for caries. We are proud to be part of each initiative within Medtech, and we are convinced that we ourselves will ultimately benefit from the projects we help develop.

Challenges specific to medtech

Data and regulations

Data generated from patients is inherently sensitive, since it contains information about a patient and a condition they may have. Therefore, we make sure to work with anonymized data in the development phase and remove any data that can be directly connected to a specific individual. Furthermore, the data has to be collected with the users’ consent, and the terms and conditions of the product have to clarify how the data is going to be used. Research projects have to undergo an ethical assessment before the data can be used, to ensure that the positive potential justifies the use of the data.

If a system using ML is going to be used on humans to diagnose, predict, or observe, or in any other way covered by the MDR (EU 2017/745), it is classified as a medical device and needs CE approval before being marketed. Depending on its intended use, the product is placed in one of several classes, each subject to a different degree of control before approval. This requires the system, ML included, to be documented, and adequate measures need to be taken to ensure it is used in the way it was intended. This makes the process more extensive than for systems used in other industries. In return, the process serves as a safety mechanism for what can and cannot be marketed for medical use, ultimately creating safety for patients and medical professionals.

Balancing actionable insights

No model is perfect, so some predictions will be wrong. The same is true for humans, but humans can make a collective decision, weighing factors that are hard to quantify. A good approach is to let the ML and humans (whether medical professionals or patients) take different roles in helping the patient. ML models can create a lot of value when screening large numbers of patients, and can give indications and summaries of large and complicated datasets. ML can give advice and help identify the best way forward. The medical professional or the patient can then use this information to make a decision.

Furthermore, it’s crucial to assess exactly how trustworthy the predictions from the ML models are when used in real life. It is also important to present the predictions in such a way that, when a model makes erroneous predictions, the negative effects are minimized. This way we can estimate the potential adverse effects of using the model and weigh them against the known positive effects of using the same model.

Adverse effects can be mitigated by, for example, withholding a prediction if the prediction confidence is below a certain threshold, if the quality of the data is lower than a set minimum, or if the person belongs to a group of patients that is inherently difficult to assess.
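The three withholding rules can be sketched as a simple gate in front of the model output. This is a minimal illustration and not our production logic: the thresholds, the group name, and the function and class names (`GateConfig`, `gate_prediction`) are all hypothetical, and in practice the limits would come from clinical validation.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class GateConfig:
    # All values below are illustrative assumptions, not validated limits.
    min_confidence: float = 0.90
    min_data_quality: float = 0.80
    hard_to_assess_groups: frozenset = frozenset({"rare_condition"})


def gate_prediction(
    prediction: str,
    confidence: float,
    data_quality: float,
    patient_group: str,
    config: GateConfig = GateConfig(),
) -> Tuple[Optional[str], str]:
    """Return (prediction, "ok"), or (None, reason) when the prediction is withheld."""
    # Poor input data makes any prediction unreliable, so check it first.
    if data_quality < config.min_data_quality:
        return None, "data quality below minimum"
    # Some patient groups are known in advance to be hard for the model.
    if patient_group in config.hard_to_assess_groups:
        return None, "patient group is difficult to assess"
    # Finally, withhold predictions the model itself is unsure about.
    if confidence < config.min_confidence:
        return None, "confidence below threshold"
    return prediction, "ok"


# Example: a confident prediction on good data is shown, an unsure one is withheld.
shown = gate_prediction("AF suspected", confidence=0.97, data_quality=0.95, patient_group="general")
withheld = gate_prediction("AF suspected", confidence=0.60, data_quality=0.95, patient_group="general")
```

Returning the reason alongside the withheld prediction lets the user interface explain why no advice is given, rather than silently showing nothing.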

Explainability

The goal of explainable machine learning is to make models and model predictions easier to understand. It can be utilized during model development and later on when the models are used, both by technical and non-technical users. A better understanding of a machine learning model is often beneficial, and sometimes even a requirement, especially in medical applications. For example, in applications where ML models are used to assist humans in decision-making, it is helpful to understand and visualize why the model reached a certain decision or made a specific prediction, as this can give the user more information than just the prediction or decision itself.

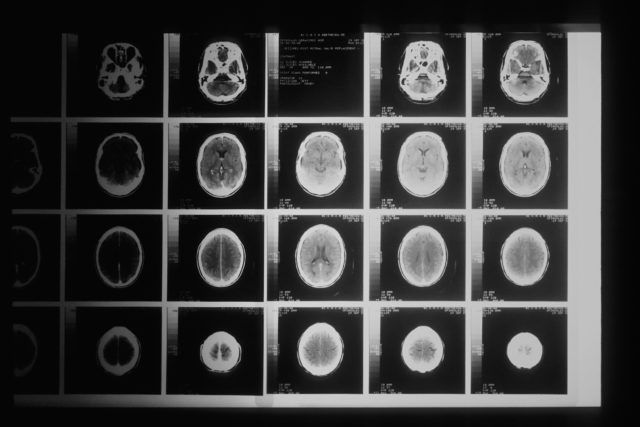

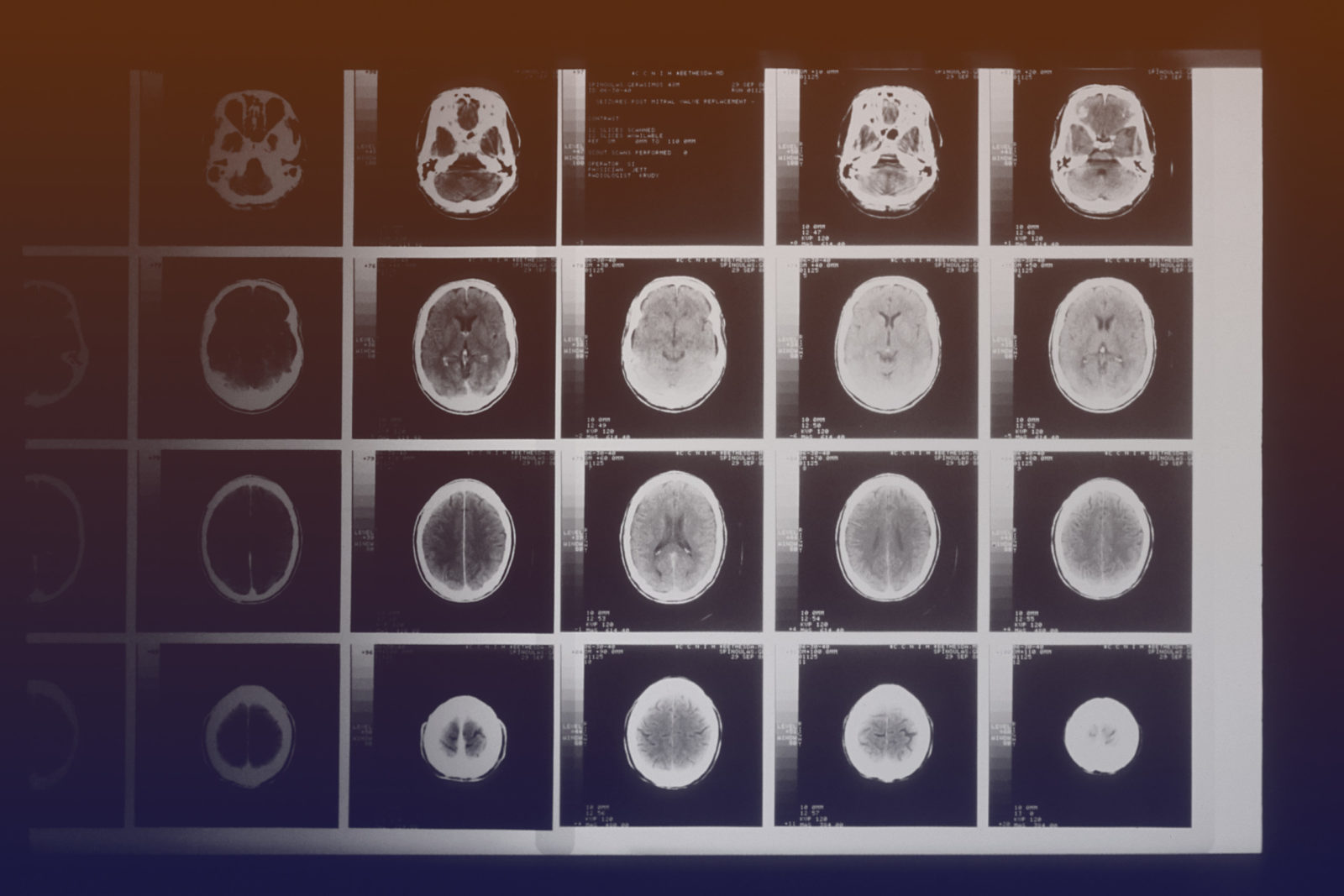

A common tool in explainable machine learning that can accomplish exactly this is to calculate feature attributions for a given prediction. Feature attribution is a method used to quantify how different subsets of the input data contribute to the prediction, be it the different parts of an image of a brain, the words in a sentence in a clinical journal, or specific patient characteristics such as age or sex. If a model predicts, based on answers in a chatbot conversation, that a patient should contact a doctor immediately, the feature attribution algorithm can tell the doctor (or anyone, for that matter) exactly which sentences or words from the conversation contributed most to the model’s conclusion. The doctor can therefore assess whether the conclusion was correct and understand the reasoning behind the decision.
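One of the simplest ways to compute feature attributions is occlusion: replace one input feature at a time with a neutral baseline value and measure how much the prediction changes. The sketch below illustrates that idea only and is not necessarily the method used in our projects. The toy model, its weights, the feature names, and the baseline values are all made up for the example.

```python
from typing import Callable, Dict

Features = Dict[str, float]


def occlusion_attributions(
    predict: Callable[[Features], float],
    features: Features,
    baseline: Features,
) -> Features:
    """Attribute a prediction to each feature by swapping it for a baseline value."""
    full_score = predict(features)
    attributions = {}
    for name in features:
        occluded = dict(features)          # copy so the original input is untouched
        occluded[name] = baseline[name]    # "remove" this feature's information
        # Positive value: the feature pushed the prediction up for this patient.
        attributions[name] = full_score - predict(occluded)
    return attributions


def toy_risk_model(features: Features) -> float:
    # Hypothetical linear risk score; the weights are invented for illustration.
    return (
        0.02 * features["age"]
        + 0.5 * features["mean_glucose"]
        - 0.1 * features["activity_hours"]
    )


# Hypothetical patient and a "typical patient" baseline to compare against.
patient = {"age": 60.0, "mean_glucose": 9.0, "activity_hours": 2.0}
baseline = {"age": 50.0, "mean_glucose": 6.0, "activity_hours": 4.0}

attributions = occlusion_attributions(toy_risk_model, patient, baseline)
most_important = max(attributions, key=lambda name: abs(attributions[name]))
```

For this toy patient, the feature with the largest attribution is the one a clinician would be shown first. Occlusion ignores interactions between features, which is one reason more elaborate attribution methods exist.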