Leveraging 3D Engines for Data Generation in Deep Learning

Introduction

Synthetic data generation has in recent years emerged as an alternative way to manually gather and annotate data sets. The advantages of a data synthesizer are mainly two-fold. Firstly, it drives the cost of acquiring data to almost zero while eliminating almost all data processing work. Secondly, it has the potential to enable quick prototyping of new machine learning ideas even when data is not available. To put it simply, a well-constructed synthetic data generator produces data in a ready-to-go format for any machine learning case. However, the challenge with synthetic data generators is that they are often domain-specific with a narrow application area and far less mature than methods for network architecture and learning.

In the field of computer vision, synthetic data generation is especially interesting since the number of relevant resources and tools has grown and improved significantly over the years. The development has not been in the field of machine learning though, but rather in game engines such as Unreal Engine, Blender, and Unity. Often produced by professional designers, realistic scenes are produced offering great details as visualized in the video below.

Modulai has deployed models trained on synthetic data in several projects and now seeks to understand the potential of 3D rendered data for solving computer vision tasks. Specifically, in this project, the task is to generate synthetic data images consisting of text using Unreal Engine. The data will be used to train a network with the aim of detecting text in images captured from real environments.

Synthesizing scene text data using static backgrounds

The scene text detection application has already adopted the use of synthetic data. Almost all state-of-the-art networks are pre-trained on synthetic data and then finetuned on real data. The most common synthetic data set used for pre-training is the SynthText data set [1]. The data set is generated from a set of 8,000 background images. By pasting text of various colors, fonts, and sizes in different locations of the background images, a data set of 800,000 images is generated. Below are two examples from the SynthText data set.

SynthText provides a base methodology and a set of principles for generating scene text data. The most important takeaway is that text should be embedded in a way that it looks as natural as possible. As a result, the rendering engine aims to produce realistic scene-text images where text is blended into suitable regions of background images using semantic segmentation and depth estimation. In Figure 2, both a suitable and an unsuitable region are visualized.

The procedure of pasting text on background images results in scenes different from what humans naturally observe in a real-world environment. Text obtained from the real world may possess variations of viewpoints, illumination conditions, and occlusions. These variations are difficult to produce by inserting text into 2D background images.

Synthesizing scene text data using a 3D engine

With game engines narrowing the gap between realistic and synthetic worlds, it is natural to explore the possibilities of game engine-based synthetic data generation. The advantages of game engines are, first of all, that real-world variations can be realized by rendering scenes and text as a whole. Furthermore, ground-truth scene information is provided by the 3D engine, resulting in more realistic text regions among many advantages.

This project uses the game engine Unreal Engine 4. When using a game engine to render scene text images, the work from [2] provides four modules. These modules provide a baseline for obtaining images with text by a game engine, executed in the following order.

- Viewfinder: The data generator is initialized with the viewfinder to automatically determine a set of coordinates in the scene suitable for text rendering. This is done by moving a camera around the 3D scene.

- Environment randomization: Followed by the viewfinder, the environment randomization is applied. The purpose of this module is to increase the diversity of the generated images by randomizing parameters in the scene.

- Text region generation: The text region generation module is then used to find text regions for a valid viewpoint with environment parameters randomized. The first stage is to propose text regions based on 2D scene information. The initial proposals are then refined using 3D information obtained from the game engine.

- Text rendering: Finally, the text rendering module is applied with the purpose of generating a text instance and rendering it into the scene. Further, the bounding box for those parts of the image containing text is obtained in a standard object detection format.

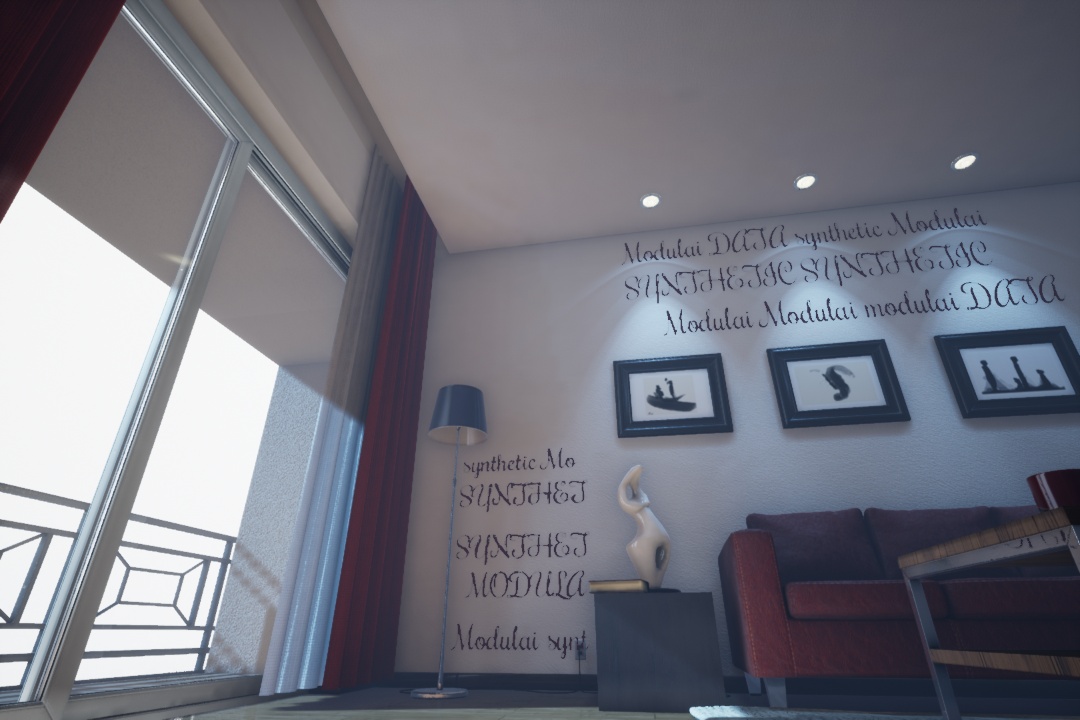

The linkage between the game environment and the data generator is made possible by the UnrealCV package, an open-source project that enables computer vision researchers to easily interact with Unreal Engine 4 [3]. The requirements to start the data generation are a scene with an embedded UnrealCV plugin, and a corpus with text and fonts. Since there are a variety of different scenes available on the Unreal Marketplace, there are endless opportunities for creating custom data sets. However, for the purpose of this project, we use the ready compiled scenes provided by [2]. Examples of images generated using the scene Realistic Rendering from Unreal Engine Marketplace and a customized corpus are visualized in the following figure.

Training of network and results

The network from [4] was trained solely on synthetic images as well as a combination of synthetic data and real data. By comparing the performance of the models trained on 3D engine generated data against SynthText, the effectiveness of the 3D engine for solving the scene text detection problems is then evaluated. When evaluating the models, the test sets from the real-world data sets ICDAR15 [5] and Total-Text [6] are used.

Precision and recall are the used performance metrics for evaluating the predictions of the networks. While the optimal scenario is to achieve both high recall and high precision, a decision needs to be made on how to tune the models. In this work, the probability threshold where the text instance is classified as present or not can be tuned to obtain a desirable metric. By letting the threshold value range from 0 to 1 and reporting the obtained pair of precision and recall for each threshold, the precision-recall curve for a model can be obtained. From the precision-recall curve, the area under the precision-recall curve (AUC) can be used as an evaluation tool for a model.

When training models on data generated from SynthText and Unreal respectively, and evaluating the models on ICDAR15 and Total-Text, the following precision-recall curves are obtained.

When evaluating ICDAR15, the AUC is 0.45 for the Unreal-model and 0.37 for the SynthText-model. The AUC is 0.45 for the Unreal-model and 0.39 for the SynthText-model when evaluating on Total-Text. Examples of predictions on real-world test sets are visualized below.

When fine-tuning the pre-trained models with a small amount of real-world data, the obtained precision-recall curves are the following.

When evaluating the models on ICDAR15, the AUC is 0.77 for the Unreal-model and 0.74 for the SynthText-model. The approximated AUC is 0.62 for the Unreal-model and 0.55 for the SynthText-model when evaluating the models on Total-Text. Examples of model predictions of the finetuned models are visualized below.

Concluding remarks

Generalization on real data is consistently better for networks trained on 3D data compared to models trained on 2D data. Hence, 3D engines can play an important role in the toolbox of any machine learning engineer.

Even if it is difficult, and beyond the scope of this project, to answer why models trained on 3D data achieve higher precision-recall scores, the 3D generated data in Figure 3 seems more realistic to most observers than the 2D generated data in Figure 1. This points to the conclusion that realism does matter. Hence, even if the developments in the field of game production have been driven by the demand for more aesthetically pleasing games that bridge the gap between virtual and real worlds, the results of this work show that those advances also provide great value to the machine learning community.

References

[1] Ankush Gupta, Andrea Vedaldi, and Andrew Zisserman. Synthetic data for text localization in natural images. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2315–2324, 2016.

[2] Shangbang Long and Cong Yao. Unrealtext: Synthesizing realistic scene text images from the unreal world. arXiv preprint arXiv:2003.10608, 2020.

[3] Yi Zhang Siyuan Qiao Zihao Xiao Tae Soo Kim Yizhou Wang Alan Yuille Weichao Qiu, Fangwei Zhong. Unrealcv: Virtual worlds for computer vision. ACM Multimedia Open Source Software Competition, 2017.

[4] Minghui Liao, Zhaoyi Wan, Cong Yao, Kai Chen, and Xiang Bai. Real-time scene text detection with differentiable binarization. In Proceedings of the AAAI conference on artificial intelligence, volume 34, pages 11474–11481, 2020.

[5] Dimosthenis Karatzas, Lluis Gomez-Bigorda, Anguelos Nicolaou, Suman Ghosh, Andrew Bagdanov, Masakazu Iwamura, Jiri Matas, Lukas Neumann, Vijay Ramaseshan Chandrasekhar, Shijian Lu, et al. Icdar 2015 competition on robust reading. In 2015 13th international conference on document analysis and recognition (ICDAR), pages 1156-1160. IEEE, 2015.

[6] Chee Kheng Ch’ng and Chee Seng Chan. Total-text: A comprehensive dataset for scene text detection and recognition. In 2017 14th IAPR international conference on document analysis and recognition (ICDAR), volume 1, pages 935–942. IEEE, 2017.