Few-shot information extraction from PDF documents

Introduction

A lot of data comes in the form of unstructured text documents. Some examples are invoices, offers, and product data assets such as technical information & manuals. To make this data available for analysis, we often want to extract the textual information in the document and convert it to a structured format. In this post, we will take a look at how LLMs with few-shot prompting can accelerate this process.

A toy problem

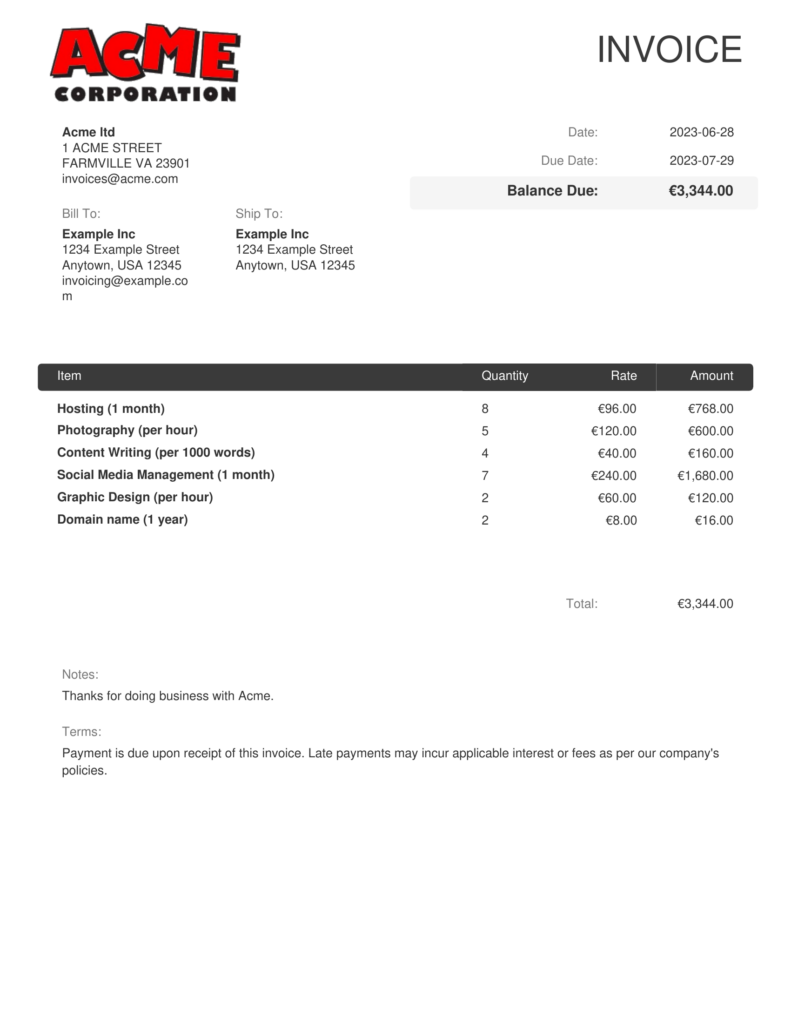

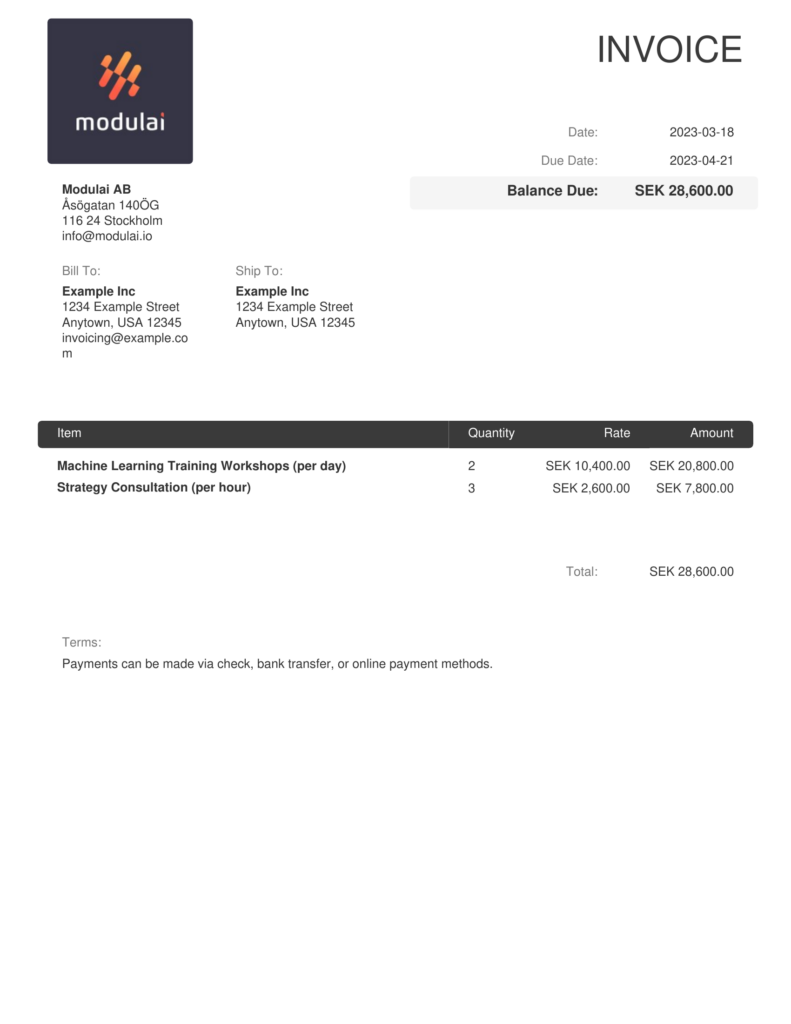

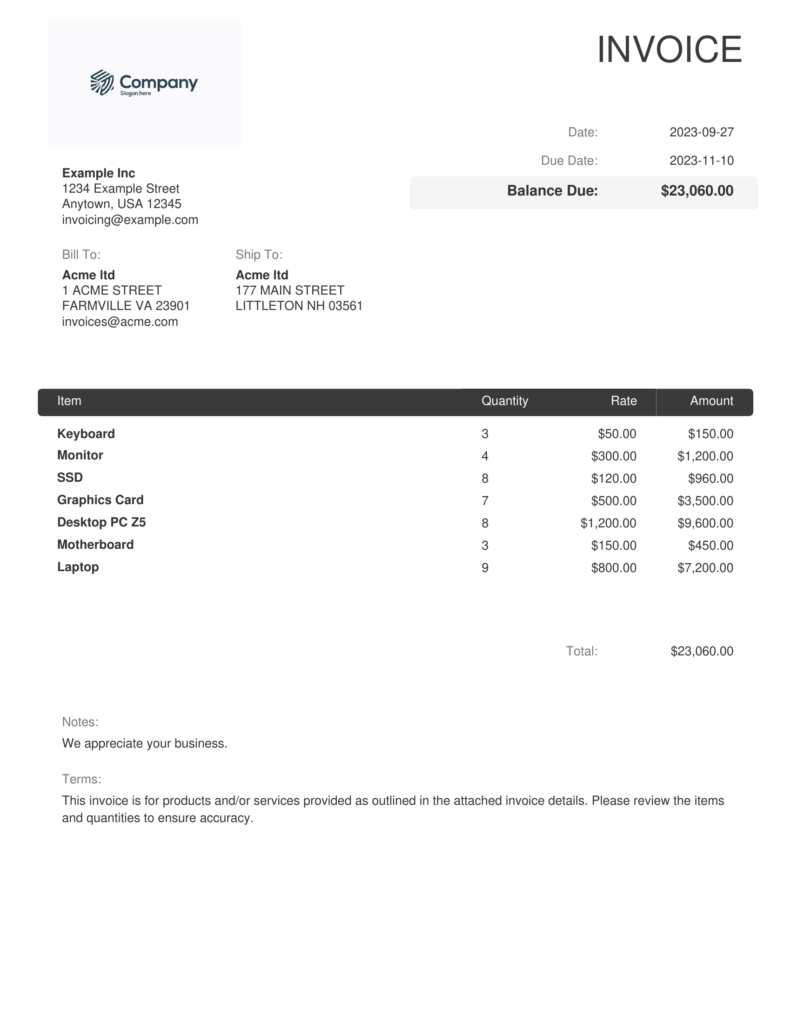

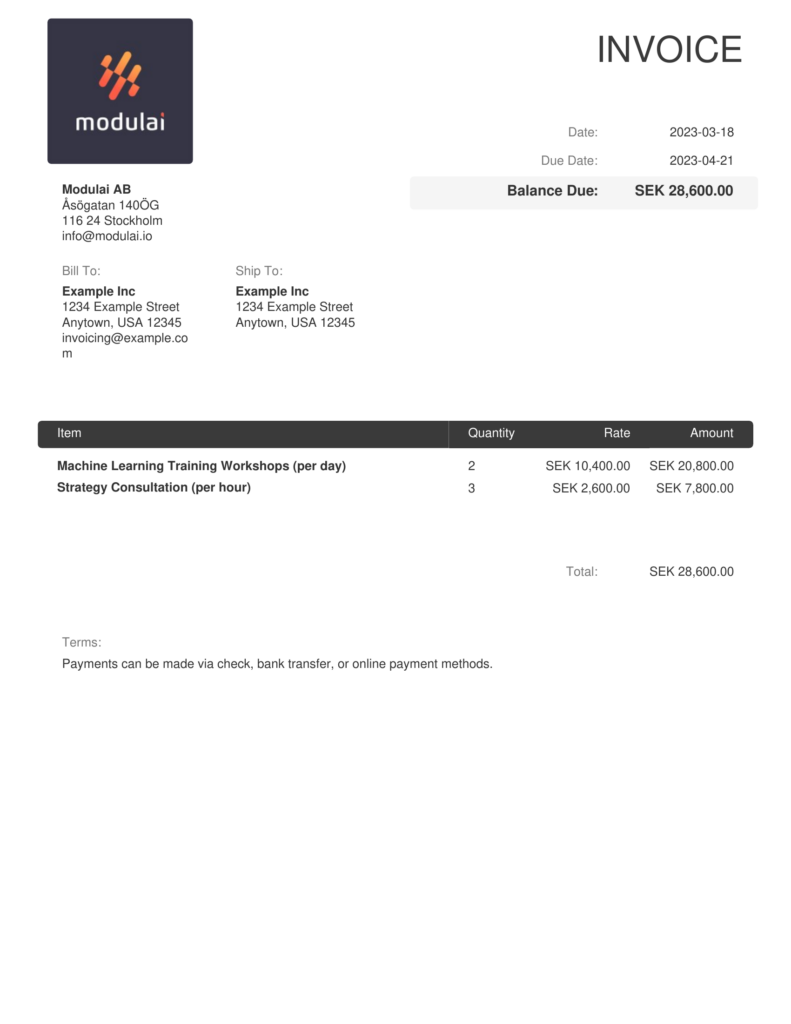

Say we have a bunch of PDF invoices. They have all been exported from the same system, so even if they represent different companies, they share more or less the same format. It is our task to extract information about:

1. Which company sent the invoice

2. Which company was billed

3. The dates the invoice was sent and payment is due

4. The products/services that were billed, how much they cost and in what currency

Let’s take a look at some sample documents first.

In the past, the most straightforward approach would be to extract text from the PDFs, handcraft some regular expressions, and evaluate one PDF at a time. The regex would be updated as needed when new PDFs come in. This is tedious, time-consuming, and error-prone – there is always an edge case.
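To make the brittleness concrete, here is a minimal sketch of the regex route, written against the text layout that pypdf returns for the sample invoice shown later in this post. The pattern and the function name `dates_with_regex` are illustrative assumptions, not code from an actual pipeline.

```python
import re

# Text fragment in the order pypdf returned it for one sample invoice:
# the dates come *before* their "Date :" / "Due Date :" headers.
SAMPLE = "Anytown, USA 12345 2023-03-18 2023-04-21 SEK 28,600.00 Date : Due Date : Balance Due :"

# Hypothetical pattern: two ISO dates directly followed by a currency code.
DATE_PAIR = re.compile(r"(\d{4}-\d{2}-\d{2})\s+(\d{4}-\d{2}-\d{2})\s+[A-Z]{3}\s")

def dates_with_regex(text: str):
    """Return (invoice_date, due_date), or None when the layout differs."""
    match = DATE_PAIR.search(text)
    return match.groups() if match else None

print(dates_with_regex(SAMPLE))
# A layout where each header precedes its value is silently missed:
print(dates_with_regex("Date : 2023-03-18 Due Date : 2023-04-21"))
```

The pattern only works for one specific ordering of elements, which is exactly the kind of edge case that forces repeated regex updates.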

The few-shot approach

Now, we will try to leverage an LLM to do the heavy lifting for us. In brief, our strategy is as follows:

1. Extract text from the PDFs

2. Define the schema that we want to hold the structured information

3. Label some examples

4. Create a prompt that instructs an LLM to take the text extracted from a PDF and output the relevant information in the schema we defined. The prompt includes a few examples of a successful parse, using the labels we crafted by hand.

5. Feed the prompt, including examples, and one input document to the LLM and let it perform the extraction task by generalizing from the examples in the prompt

This is a few-shot paradigm because we provide the pre-trained LLM with a few examples. If we had not provided any examples in the prompt, it would be a zero-shot setup.
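The difference between the two setups can be sketched in a few lines. The helper `build_prompt`, the instruction text, and the shortened example strings below are hypothetical and only illustrate the idea; they are not the prompt used later in this post.

```python
from typing import List, Tuple

def build_prompt(instruction: str, examples: List[Tuple[str, str]], document: str) -> str:
    """Assemble instruction, labeled examples, and the new document into one prompt."""
    parts = [instruction]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    # The final block leaves "Output:" open for the model to complete.
    parts.append(f"Input: {document}\nOutput:")
    return "\n\n".join(parts)

instruction = "Extract the sender and recipient of the invoice as JSON."
few_shot = build_prompt(
    instruction,
    [("INVOICE Modulai AB ... Bill To : Example Inc ...",
      '{"from_company": "Modulai AB", "to_company": "Example Inc"}')],
    "<text extracted from a new PDF>",
)
# Same call with an empty example list: a zero-shot prompt.
zero_shot = build_prompt(instruction, [], "<text extracted from a new PDF>")
print(few_shot)
```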

1. Extracting text from a PDF

The PDF format, while highly portable and flexible, does not easily lend itself to information retrieval. PDFs are designed for presentation, which means that the document just needs to look the way it is supposed to. Most PDFs, with the notable exception of scanned documents, do contain the plain text that they display. From such documents, it is possible to extract the text, though the order of text elements is usually not what one would expect.

Here, we use the pypdf Python library.

    from pypdf import PdfReader

    def read_with_pypdf(pdf_path: str) -> str:
        """Extract the text of every page and join the pages with newlines."""
        reader = PdfReader(pdf_path)
        page_text = [page.extract_text() for page in reader.pages]
        text = "\n".join(page_text)
        return text

For the middle example above, we end up with the body of text below. Notice the line breaks in unexpected places, for example before the “:” symbols, and unexpected ordering of some elements, for example, the dates appearing before their respective headers.

print(read_with_pypdf("sample.pdf"))

INVOICE Modulai AB Åsögatan 140ÖG 116 24 Stockholm [email protected] Bill To : Example Inc 1234 Example Street Anytown, USA 12345 [email protected] m Ship To : Example Inc 1234 Example Street Anytown, USA 12345 2023-03-18 2023-04-21 SEK 28,600.00 Date : Due Date : Balance Due : Item Quantity Rate Amount Machine Learning Training Workshops (per day) 2 SEK 10,400.00 SEK 20,800.00 Strategy Consultation (per hour) 3 SEK 2,600.00 SEK 7,800.00 SEK 28,600.00 Total : Terms : Payments can be made via check, bank transfer, or online payment methods.
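If the stray line breaks get in the way, the text can be tidied before it goes into a prompt. The helper below is a minimal sketch; `normalize_whitespace` is a hypothetical name, and this cleanup step is an assumption rather than part of the pipeline described in this post.

```python
import re

def normalize_whitespace(text: str) -> str:
    """Collapse runs of whitespace (including line breaks) into single spaces."""
    collapsed = re.sub(r"\s+", " ", text).strip()
    # Re-attach colons that were pushed onto their own line: "Bill To :" -> "Bill To:"
    return re.sub(r"\s+:", ":", collapsed)

raw = "Bill To\n: Example Inc\n1234 Example Street\nDate\n: Due Date\n:"
print(normalize_whitespace(raw))
```

Note that this only addresses whitespace. It does nothing about the ordering of elements, such as dates appearing before their headers.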

2. Defining a schema

A key step in extracting structured information is defining the schema we want to represent the information with. To this end, we define a Pydantic model:

    from typing import List

    from pydantic import BaseModel, Field

    class InvoiceLine(BaseModel):
        name: str = Field(description="The name of the product or service provided")
        quantity: int = Field(description="Quantity of the product or service provided")
        unit_cost: float = Field(description="The price of the product or service. Per unit, not the total price")

    class InvoiceData(BaseModel):
        from_company: str = Field(description="The name of the company that is sent the invoice")
        to_company: str = Field(description="The name of the company that is receiving the invoice")
        currency: str = Field(description="The currency of the invoice. Either sek (SEK), usd ($, USD) or eur (€, EUR)")
        invoice_date: str = Field(description="The date the invoice was sent")
        due_date: str = Field(description="The date the invoice is due")
        products: List[InvoiceLine] = Field(description="The list of products that are being invoiced")

To guide the model, we provide descriptions for each field. For example, we want to extract the currency as one of three distinct values (“sek”, “eur”, or “usd”) even if we expect the document to contain the €-symbol to indicate the Euro currency. We add this bit of information to the description of the currency field.
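A field description is only an instruction to the model, so nothing in the schema itself enforces the three allowed values. A small post-processing guard can catch deviations. The sketch below is an assumption layered on top of the approach in this post; `normalize_currency` and the alias table are hypothetical.

```python
# Aliases taken from the field description; extend the table for other currencies.
CURRENCY_ALIASES = {
    "sek": "sek",
    "usd": "usd", "$": "usd",
    "eur": "eur", "€": "eur",
}

def normalize_currency(value: str) -> str:
    """Map a currency symbol or code to 'sek', 'usd' or 'eur'; raise on anything else."""
    key = value.strip().lower()
    if key not in CURRENCY_ALIASES:
        raise ValueError(f"Unexpected currency: {value!r}")
    return CURRENCY_ALIASES[key]

print(normalize_currency("EUR"))
print(normalize_currency("€"))
```

Raising on unknown values makes an unexpected model output visible instead of letting it slip into downstream analysis.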

3. Labeling some examples

With the schema defined, we can move on to labeling some examples. We go through a few PDFs and create JSON documents with information that matches our schema. Let’s take the invoice whose text we extracted above as an example.

Based on the document, we enter the following data into our pre-determined format:

    {
        "from_company": "Modulai AB",
        "to_company": "Example Inc",
        "invoice_date": "2023-03-18",
        "due_date": "2023-04-21",
        "currency": "sek",
        "products": [
            {
                "name": "Machine Learning Training Workshops (per day)",
                "quantity": 2,
                "unit_cost": 10400.0
            },
            {
                "name": "Strategy Consultation (per hour)",
                "quantity": 3,
                "unit_cost": 2600.0
            }
        ]
    }
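Hand-made labels are easy to get wrong, so a quick consistency check can help. The sketch below recomputes the invoice total from the labeled lines and compares it with the SEK 28,600.00 total printed on the invoice. `label_total` is a hypothetical helper, not part of the original workflow.

```python
import json

# The products section of the label above
label = json.loads("""
{
  "currency": "sek",
  "products": [
    {"name": "Machine Learning Training Workshops (per day)", "quantity": 2, "unit_cost": 10400.0},
    {"name": "Strategy Consultation (per hour)", "quantity": 3, "unit_cost": 2600.0}
  ]
}
""")

def label_total(label: dict) -> float:
    """Sum quantity * unit_cost over all labeled invoice lines."""
    return sum(line["quantity"] * line["unit_cost"] for line in label["products"])

# 2 * 10400.0 + 3 * 2600.0 = 28600.0, the total shown on the invoice
assert label_total(label) == 28600.0
```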

4. Creating the few-shot prompt

With a few documents labeled, it’s time to generate the few-shot prompt. To do this, we have the Kor library to help us. We take our Pydantic model, our LLM and our examples, and Kor handles the rest.

    from langchain.llms import OpenAIChat
    from kor import from_pydantic, create_extraction_chain, JSONEncoder

    # N.b. the OPENAI_API_KEY environment variable must be set
    llm = OpenAIChat(
        model_name="gpt-3.5-turbo",  # ChatGPT
        temperature=0,
    )

    schema, validator = from_pydantic(
        model_class=InvoiceData,
        description="Information extracted from an invoice",
        examples=examples,  # List of tuples (input, output)
        many=False,
    )

    chain = create_extraction_chain(
        llm,
        schema,
        encoder_or_encoder_class=JSONEncoder,
        validator=validator,
    )
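The snippet above uses `examples` without defining it. A minimal sketch of how such a list might be assembled follows, assuming each output is a plain dict matching the schema and that texts and labels are keyed by a shared document name. `build_examples` and the document names are hypothetical.

```python
from typing import Any, Dict, List, Tuple

def build_examples(
    texts: Dict[str, str], labels: Dict[str, Dict[str, Any]]
) -> List[Tuple[str, Dict[str, Any]]]:
    """Pair each labeled document's extracted text with its label, matched by name."""
    missing = sorted(set(labels) - set(texts))
    if missing:
        raise KeyError(f"No extracted text for labeled documents: {missing}")
    return [(texts[name], labels[name]) for name in sorted(labels)]

# Hypothetical inputs: in practice the texts would come from read_with_pypdf
# and the labels from the hand-written JSON documents.
texts = {"invoice_01": "INVOICE Modulai AB ...", "invoice_02": "INVOICE ..."}
labels = {"invoice_01": {"from_company": "Modulai AB", "to_company": "Example Inc"}}
examples = build_examples(texts, labels)
```

Only documents that have a label end up in the list; unlabeled documents remain available as test inputs.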

The examples are (input, output) pairs that show the model the result of a successful parse. Let’s take a look at the generated prompt:

print(chain.prompt.format_prompt(text="<text extracted from test PDF>").to_string())

Your goal is to extract structured information from the user's input that matches the form described below. When extracting information please make sure it matches the type information exactly. Do not add any attributes that do not appear in the schema shown below.

```TypeScript
invoicedata: { // Information extracted from an invoice. .
 from_company: string // The name of the company that is sent the invoice
 to_company: string // The name of the company that is receiving the invoice
 currency: string // The currency of the invoice. Either sek (SEK), usd ($, USD) or eur (€, EUR)
 invoice_date: string // The date the invoice was sent
 due_date: string // The date the invoice is due
 products: Array<{ // The list of products that are being invoiced
  name: string // The name of the product or service provided
  quantity: number // Quantity of the product or service provided
  unit_cost: number // The price of the product or service. Per unit, not the total price
 }>
}
```

Please output the extracted information in JSON format. Do not output anything except for the extracted information. Do not add any clarifying information. Do not add any fields that are not in the schema. If the text contains attributes that do not appear in the schema, please ignore them. All output must be in JSON format and follow the schema specified above. Wrap the JSON in <json> tags.

Input: <text extracted from example PDF 1>

Output: <JSON label for example PDF 1>

Input: <text extracted from example PDF 2>

Output: <JSON label for example PDF 2>

...

Input: <text extracted from test PDF>

Output:

It is worth pointing out that Kor does nothing beyond generating this prompt for us. We could just as well have created this prompt from scratch ourselves. The benefit of a library like Kor is that it lets us generate the prompt quickly and easily from a Pydantic schema.
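Going fully from scratch would also mean reading the model's reply ourselves. Since the prompt asks for JSON wrapped in `<json>` tags, a hand-rolled parser might look like the sketch below. `parse_tagged_json` is a hypothetical helper, and the reply string is made up for illustration.

```python
import json
import re
from typing import Any, Optional

JSON_TAG = re.compile(r"<json>(.*?)</json>", re.DOTALL)

def parse_tagged_json(response: str) -> Optional[Any]:
    """Return the decoded content of the first <json>...</json> block, or None."""
    match = JSON_TAG.search(response)
    if match is None:
        return None
    try:
        return json.loads(match.group(1))
    except json.JSONDecodeError:
        # The model produced tags but not valid JSON inside them.
        return None

reply = '<json>{"invoicedata": {"from_company": "Modulai AB", "currency": "sek"}}</json>'
print(parse_tagged_json(reply))
```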

5. Running the extraction chain

We’re now ready to put the model to the test.

results = [chain.run(test_text) for test_text in test_texts]
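To judge the output, each result can be compared with a hand-made label for the same document. The sketch below assumes the extracted fields have already been pulled out of each result as a plain dict; how to do that depends on the structure `chain.run` returns, which is not shown here. `exact_match_rate` and the toy data are hypothetical.

```python
from typing import Any, Dict, List

def exact_match_rate(predictions: List[Dict[str, Any]], labels: List[Dict[str, Any]]) -> float:
    """Fraction of documents whose extracted fields equal the label exactly."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        return 0.0
    hits = sum(1 for predicted, expected in zip(predictions, labels) if predicted == expected)
    return hits / len(labels)

# Toy data for illustration only
labels = [{"currency": "sek"}, {"currency": "eur"}]
predictions = [{"currency": "sek"}, {"currency": "usd"}]
print(exact_match_rate(predictions, labels))  # 0.5
```

Exact matching is strict: a single differing field counts the whole document as wrong, which suits a claim like "without error".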

As it turns out, a single example is enough for the LLM to handle 19 unseen documents without error. Two things stand out with this approach:

- the model’s ability to generalize to currencies that are not represented in the example document

- the automatic parsing of prices as numbers, despite them containing both commas and periods
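For comparison, a hand-written parser for prices such as `SEK 10,400.00` might look like the sketch below. `parse_price` is a hypothetical helper, and it hard-codes the assumption that commas group thousands and a period marks the decimals.

```python
import re

def parse_price(raw: str) -> float:
    """Parse amounts like 'SEK 10,400.00', assuming ',' groups thousands and '.' marks decimals."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw)
    if match is None:
        raise ValueError(f"No amount found in {raw!r}")
    return float(match.group(0).replace(",", ""))

print(parse_price("SEK 10,400.00"))
print(parse_price("SEK 2,600.00"))
```

An invoice that writes the same amount as `10.400,00` would be misread as 10.4 by this function, which is the kind of edge case the LLM handled without extra rules.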

Closing remarks

The few-shot LLM approach to document information extraction is very straightforward to implement and apply. In this post, we have used OpenAI’s APIs, which means there is a small cost associated with each call of chain.run(...). Depending on the use case, it’s worth considering whether a self-hosted model is more cost-effective, for example if the extraction chain needs to run regularly on new documents rather than in a run-once-and-forget scenario.
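A back-of-the-envelope estimate can inform that decision. The sketch below assumes token-based pricing; `estimated_cost` is a hypothetical helper, and the numbers in the call are placeholders, not real prices or measurements.

```python
def estimated_cost(
    num_documents: int,
    tokens_per_call: int,
    price_per_1k_tokens: float,
) -> float:
    """Rough API cost: documents * tokens per call * price per 1,000 tokens."""
    return num_documents * tokens_per_call / 1000 * price_per_1k_tokens

# Placeholder numbers, not real prices or measurements
print(estimated_cost(num_documents=1000, tokens_per_call=1500, price_per_1k_tokens=0.002))
```

Because every call repeats the instructions and the labeled examples, `tokens_per_call` grows with each example added to the prompt.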

With the recent announcement of multi-modal LLMs like GPT-4V, it will be interesting to assess how well they can extract the same information given just an image of the PDF. This would make the whole process agnostic to whether the PDFs actually contain text data, and eliminate the need for any text extraction step.